The first time I had a proper conversation with an AI chatbot, I felt a bit like I was talking to my diary, if my diary could talk back and occasionally knew more about quantum physics than I did. It was liberating, really. No judgment, no interruptions, just pure conversation. But then, somewhere between asking it to help me write a birthday card and confessing my complete bewilderment at TikTok, a thought crept in: where is all this going?

That question, my friends, is what we’re here to unpack today. Because whilst these AI platforms are brilliant, useful, and sometimes eerily insightful, we need to have a proper chat about whether we should be treating them like our best mate down the pub or more like that neighbour who’s lovely but definitely gossips.

Why This Matters More Than You Think

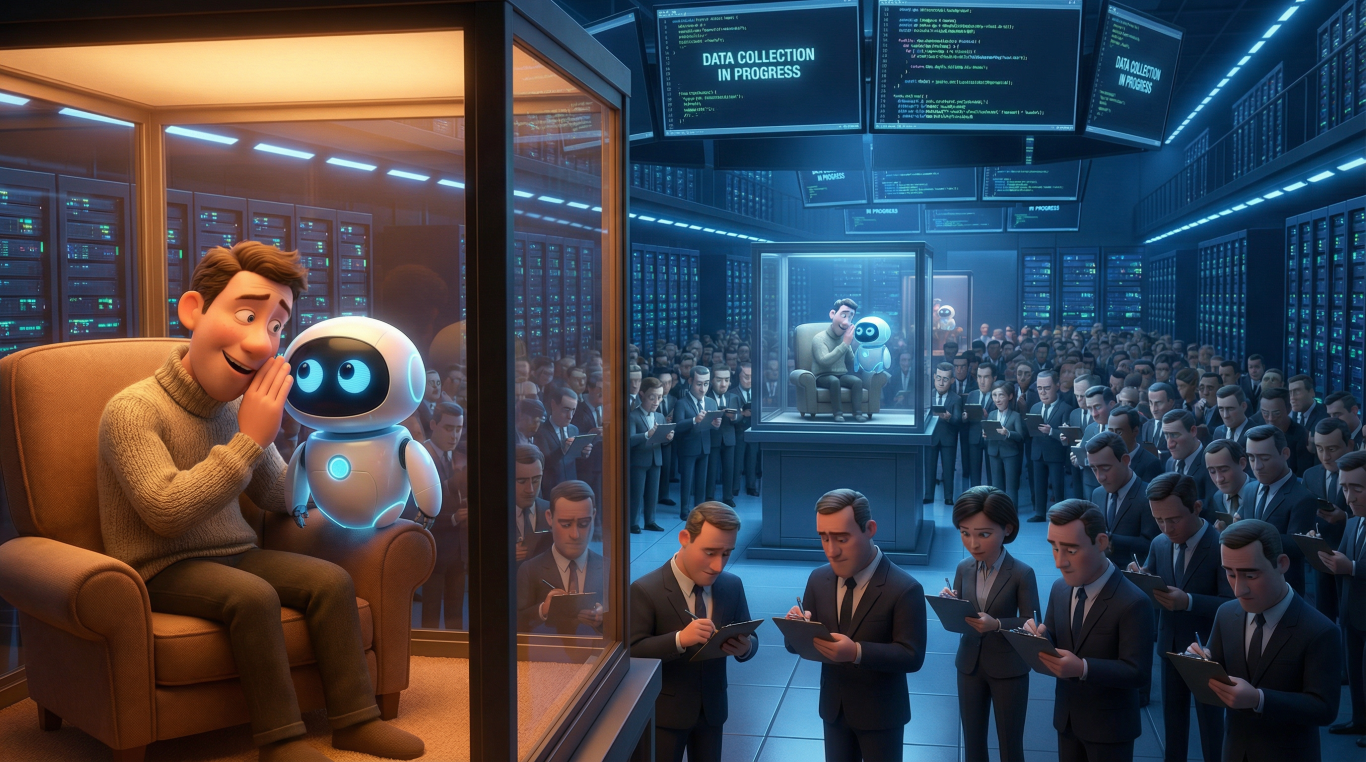

Let me paint you a picture. You’re chatting with an AI assistant about something personal, maybe health concerns, family troubles, or financial worries. It feels private, doesn’t it? Just you and your glowing screen. But here’s the thing: AI privacy concerns aren’t just for the tinfoil hat brigade anymore. They’re real, they’re relevant, and they affect every single one of us who’s ever typed a question into ChatGPT, Claude, or any of their digital cousins.

The technology is brilliant, transformative even. These AI platforms can help us write, learn, create, and solve problems we couldn’t crack on our own. But unlike that chat with your trusted friend over coffee, your conversation with an AI might be stored, analyzed, or even used to train future versions of the system. And that changes everything.

What AI Platforms Are Actually Used For (And What They’re Not)

Right, let’s get practical. These AI conversation platforms are genuinely useful for loads of things. People use them for writing assistance, learning new skills, brainstorming ideas, getting quick answers to questions, translating languages, and even debugging computer code. I’ve used them to help plan holidays, understand confusing medical jargon from my GP, and yes, to settle arguments about whether it was Roger Moore or Sean Connery in that particular Bond film (it was Moore, by the way).

What they’re NOT meant for, though some people don’t realize this, is storing your passwords, processing your credit card details, or replacing actual professional advice from doctors, lawyers, or therapists. They’re not secure vaults. They’re not confidential in the legal sense. And whilst they’re clever, they’re not infallible.

The reason for these limitations comes down to how they work and how they’re built. These systems are designed to be helpful and conversational, not to be Fort Knox. They’re more like that helpful person at the information desk than the bank manager in the secure vault.

The Evolution of AI Conversation Platforms

The journey from early digital assistants to today’s AI platforms is like comparing a flip phone to a smartphone. Same basic idea, completely different execution.

The Early Days: Chatbots That Couldn’t Really Chat

The early chatbots were, let’s be kind, a bit rubbish. Think of ELIZA, built back in the 1960s, which set the template, or SmarterChild, which chatted away on MSN Messenger in the early 2000s. They worked on simple pattern matching: you’d type something, they’d spot keywords, and they’d spit back pre-programmed responses. It was like talking to someone who only half-listened and answered with phrases they’d memorized.
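For the curious, here’s roughly what that keyword trick looks like as a toy in Python. The rules, the replies and the `reply` function are made up for illustration; the real ELIZA also ranked keywords and reshuffled your own words back at you, which this sketch skips.

```python
import re

# Hypothetical rules in the spirit of early chatbots: each keyword pattern
# maps to a canned reply. Nothing here "understands" the message.
RULES = [
    (re.compile(r"\b(mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your family."),
    (re.compile(r"\b(sad|unhappy)\b", re.IGNORECASE),
     "I am sorry to hear you are feeling down."),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE),
     "Hello! What would you like to talk about?"),
]
FALLBACK = "Please, go on."


def reply(message: str) -> str:
    """Return the canned reply for the first rule whose keyword appears."""
    for pattern, response in RULES:
        if pattern.search(message):
            return response
    # No keyword spotted: fall back to a vague, all-purpose prompt.
    return FALLBACK


if __name__ == "__main__":
    print(reply("My mother never calls"))
    print(reply("What is quantum physics?"))
```

Ask it anything outside its little list of keywords and you get the same shrug every time, which is exactly the half-listening feel described above.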

The Machine Learning Era: Getting Smarter

Things started improving in the 2010s with machine learning. Instead of just matching patterns, these systems could actually learn from data. Think of it like the difference between following a recipe exactly and understanding cooking well enough to improvise. These systems could handle more complex conversations, but they still felt robotic. You knew you weren’t talking to a person.

The Transformer Revolution: The Game Changer

Then, around 2017, something called the “Transformer” architecture came along. No, not the robots in disguise, though the name is equally dramatic. This was a new way of processing language that let AI understand context much better. It’s like the difference between understanding individual words and understanding the whole story.
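The heart of the Transformer is an operation called scaled dot-product attention: each word scores how relevant every other word is, and its new representation becomes a weighted blend of them all. Below is a bare-bones sketch in plain Python with made-up two-number “word vectors”. Real models add learned projections, many attention heads, position information and dozens of stacked layers, none of which appear here.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    biggest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors.

    For each query, score it against every key, turn the scores into
    weights, and return the weighted average of the value vectors.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


if __name__ == "__main__":
    # Made-up 2-dimensional vectors for a three-word sentence.
    words = ["the", "river", "bank"]
    vectors = [[0.1, 0.0], [1.0, 0.2], [0.9, 0.3]]
    # Real models derive queries, keys and values from learned projections of
    # each token's vector; reusing the same vectors keeps this sketch short.
    for word, vec in zip(words, attention(vectors, vectors, vectors)):
        print(word, [round(x, 3) for x in vec])
```

The point is that every word’s output depends on the whole sentence at once, which is what “understanding the whole story” means in mechanical terms.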

Today’s AI: ChatGPT, Claude, and Friends

This brings us to the current generation, the ones you’re probably using. ChatGPT launched in November 2022 and basically broke the internet. Suddenly, AI could have conversations that felt natural, understand nuance, admit mistakes, and even crack jokes (some of them actually funny). Other platforms like Claude, Google’s Bard (now Gemini), and Microsoft’s Bing Chat (now Copilot) followed.

The benefit over previous versions is night and day. These systems understand context across entire conversations, can handle complex reasoning, adapt their tone and style, and provide detailed, nuanced responses. It’s the difference between asking directions from someone who barely speaks your language and chatting with a knowledgeable local.

How These AI Platforms Actually Work

Right, deep breath. I’m going to explain this without making your eyes glaze over, I promise.

Imagine you’re teaching a very dedicated student who’s read essentially the entire internet. That’s step one. These AI systems are trained on massive amounts of text from books, websites, articles, and conversations. They learn patterns in language, how words relate to each other, what usually follows what, and how humans typically communicate.

When you type a message, the system doesn’t understand it the way you and I understand things. It doesn’t have feelings or consciousness. Instead, it breaks your message down into tiny pieces, analyzes the patterns, and predicts what words should come next based on everything it’s learned. It’s like a phenomenally sophisticated autocomplete.

Here’s the process, step by step:

First, your message gets broken down into tokens (think of these as word fragments). Then, the system analyzes these tokens in relation to each other, working out context and meaning through mathematical relationships. It’s doing billions of calculations to work out what you’re asking. Next, it generates a response by predicting the most appropriate words to come next, one after another, based on the conversation so far and its training. Finally, it presents you with what seems like a thoughtful, human-like response.
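To make “sophisticated autocomplete” concrete, here’s a deliberately unsophisticated version: it counts which word follows which in a tiny made-up corpus, then builds a reply one word at a time by always picking the most common follower. The function names are hypothetical, the “tokens” are whole words rather than fragments, and a real model replaces the counting with a neural network and often samples rather than always taking the top choice.

```python
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Real systems split text into sub-word fragments (e.g. byte-pair encoding);
    # splitting on whitespace is a simplification for this sketch.
    return text.lower().split()


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count which token follows which: a crude stand-in for 'training'."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, prompt: str, max_new_tokens: int = 5) -> str:
    """Greedily append the most frequent next token, one token at a time."""
    tokens = tokenize(prompt)
    if not tokens:
        return ""
    for _ in range(max_new_tokens):
        candidates = follows.get(tokens[-1])
        if not candidates:
            break  # never seen this token followed by anything
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)


if __name__ == "__main__":
    corpus = [
        "the cat sat on the mat",
        "the cat chased the mouse",
        "the dog sat on the rug",
    ]
    model = train_bigrams(corpus)
    print(generate(model, "the dog"))
```

Run it and you get a sentence that sounds grammatical and means nothing much, a small taste of why fluent output isn’t the same as correct output.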

The important bit, and the bit that raises those chatbot security risks, is that with the big-name chatbots this all happens on servers owned by the company running the AI. Your conversation travels over the internet to their computers, gets processed, and comes back to you. It’s not happening on your device. It’s happening in what we call “the cloud,” which is just a fancy way of saying “someone else’s computer.”
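Here’s a sketch of what “your conversation travels to their computers” can look like in practice. Many chat APIs expect the conversation so far to be sent along with each new message, packaged as JSON. The endpoint below is a placeholder (example.com is reserved for examples) and the payload shape is an assumption, not any particular company’s format; the code only builds the request and never sends it.

```python
import json
import urllib.request

# Hypothetical endpoint: example.com is reserved for documentation and examples.
ENDPOINT = "https://api.example.com/v1/chat"


def build_request(history: list[dict], new_message: str) -> urllib.request.Request:
    """Package the conversation so far plus the new message as a JSON POST."""
    messages = history + [{"role": "user", "content": new_message}]
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "I have a weird rash on my arm."},
        {"role": "assistant", "content": "How long have you had it?"},
    ]
    req = build_request(history, "About a week.")
    # Only print what *would* be sent; nothing is transmitted here.
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Notice that the earlier, more personal message rides along again with the new one: everything in that payload leaves your device.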

What the Future Holds

If I had a crystal ball, I’d probably be on a beach somewhere rather than writing this, but we can make some educated guesses about where AI conversations are heading.

We’re likely to see AI become more personalized, remembering your preferences and history better (which is both convenient and slightly worrying from a privacy standpoint). They’ll probably get better at understanding emotions and context, making conversations feel even more natural. We’re already seeing AI being built into everything from your phone to your car to your fridge, though I’m not entirely sure I need my fridge to chat with me about my cheese choices.

The technology will likely become more specialized too. Instead of one general AI that does everything adequately, we’ll have AI experts in specific fields, like medical AI, legal AI, or creative AI, each trained specifically for their domain.

But here’s what concerns me about the future: as these systems become more conversational and helpful, we’ll naturally trust them more. We’ll share more personal information, rely on them for more important decisions, and integrate them deeper into our lives. And that makes the question of AI data privacy absolutely critical.

The Big Question: Security, Vulnerabilities, and Why You Should Care

Now we get to the meat of it. Should you trust these platforms with your private conversations? The answer is complicated, like most honest answers are.

Let’s start with what happens to your data. When you chat with most AI platforms, your conversations are typically stored on their servers. Some companies say they use these conversations to improve their AI systems, which is a polite way of saying your chat about your embarrassing rash might help train the next version of their AI. Other companies claim they don’t use your data for training, but they still store it for a period of time.

Here’s where AI privacy concerns get real. First, there’s the question of data breaches. Any company storing data can be hacked. It’s not about if, it’s about when. If someone breaks into these servers, your conversations could be exposed. Imagine all those questions you asked, all those personal details you shared, suddenly readable by strangers. Not ideal.

Second, there’s the issue of who has access to your data within the company itself. Employees might be able to read conversations for quality control or safety monitoring. Your private chat isn’t necessarily as private as you think.

Third, there’s the legal side. Depending on where you live and where the company is based, your conversations might be subject to legal requests from governments or law enforcement. That chat you had isn’t protected by the same confidentiality rules as, say, a conversation with your doctor or lawyer.

Then we’ve got the chatbot security risks around the AI itself being manipulated. Clever users have found ways to “jailbreak” AI systems, making them reveal information they shouldn’t or behave in ways they weren’t designed to. If someone can manipulate the AI, they might be able to access or infer information about other users’ conversations.

There’s also the risk of the AI itself being wrong or misleading. These systems can “hallucinate,” which is the technical term for making stuff up confidently. If you’re relying on AI for important information and don’t verify it, you could make decisions based on complete nonsense delivered in a very convincing tone.

What You Can Actually Do About It

I’m not here to scare you off using these tools entirely. They’re too useful for that, and I’d be a hypocrite since I use them regularly. But I am here to help you be smart about it.

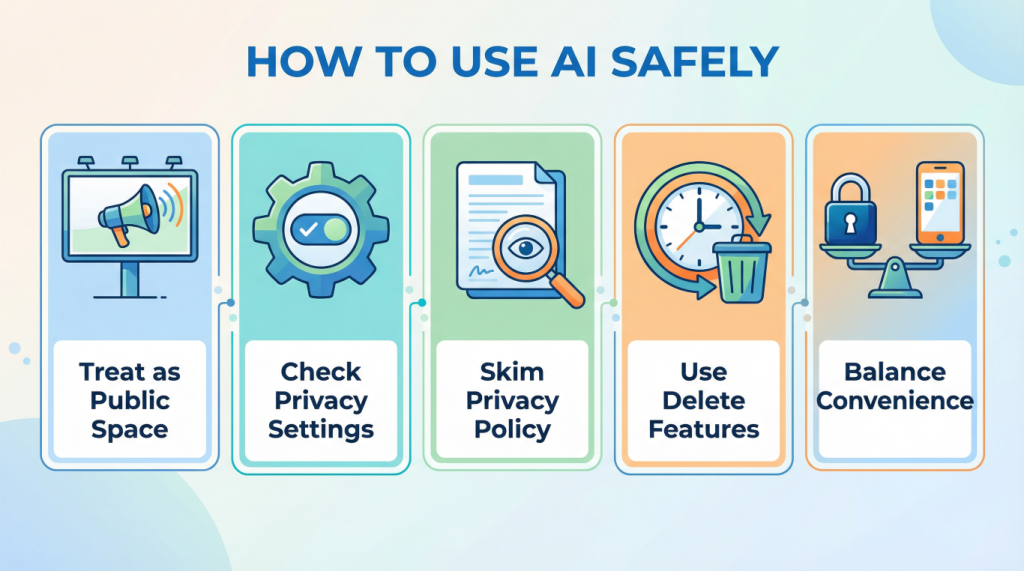

First, treat AI conversations like you’re talking in a public space, not your bedroom. Don’t share anything you’d be mortified to see on a billboard. No passwords, no financial details, no deeply personal medical information that could identify you.
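If you’d like a safety net for the “no passwords, no financial details” rule, a few regular expressions can catch the most obvious slips before you paste text into a chatbot. The patterns and the `redact` function are illustrative assumptions: they will miss names, addresses and health details, and may occasionally flag an innocent long number.

```python
import re

# Each rule: (pattern, replacement). These are illustrative assumptions and
# will not catch everything (names, addresses, health details slip through).
RULES = [
    # E-mail addresses such as jo@example.com
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    # 13-19 digit runs, optionally split by single spaces or dashes (card-number shaped)
    (re.compile(r"\b\d(?:[ -]?\d){12,18}\b"), "[CARD]"),
    # "password: hunter2" or "password=hunter2": keep the label, hide the value
    (re.compile(r"(password\s*[:=]\s*)\S+", re.IGNORECASE), r"\1[REDACTED]"),
]


def redact(text: str) -> str:
    """Replace obviously sensitive substrings before text goes to a chatbot."""
    for pattern, replacement in RULES:
        text = pattern.sub(replacement, text)
    return text


if __name__ == "__main__":
    print(redact("Email jo@example.com, card 4111 1111 1111 1111, password: hunter2"))
```

Treat it as a seatbelt, not a force field: the surest protection is still not typing the sensitive bit in the first place.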

Second, check the privacy settings. Many platforms let you opt out of having your data used for training. The option is often buried, but it’s worth finding.

Third, read the privacy policy. I know, I know, nobody reads those. But skim it at least. Look for sections about data retention, who can access your data, and how they use it. If a company isn’t transparent about this, that’s a red flag.

Fourth, use the privacy features your platform offers. Many now have options for temporary chats that aren’t saved, or ways to delete conversations. Use them for sensitive topics.

Fifth, remember that convenience and privacy are often at odds. The more personalized and helpful you want your AI to be, the more data it needs about you. Decide what trade-off you’re comfortable with.

My Take on All This

Look, I love these AI platforms. I really do. They’ve made my life easier in countless ways. But I also think we’ve been a bit too quick to treat them like trusted confidants without really understanding what that means.

The technology isn’t the problem. The problem is that we’re using 21st-century AI with 20th-century assumptions about privacy and security. We assume our conversations are private because they feel private, but feeling and being are two different things.

I’m not saying don’t use AI chatbots. I’m saying use them wisely. Be aware of what you’re sharing and where it’s going. Think of it like this: you wouldn’t discuss your bank details loudly on a crowded bus, even though technically those strangers aren’t supposed to be listening. Apply the same logic to AI conversations.

The companies building these platforms need to do better too. They need clearer privacy policies, stronger security, and more transparent practices. But until they do, the responsibility falls on us to protect ourselves.

Wrapping This Up

So, should you trust AI platforms with your private conversations? The honest answer is: sort of, but not completely, and definitely not blindly.

These platforms are powerful tools that can genuinely improve your life. They can help you learn, create, and solve problems. But they’re not your therapist, your lawyer, or your best friend. They’re commercial products run by companies that have their own interests, which don’t always align perfectly with your privacy.

The key AI privacy concerns aren’t going away anytime soon. If anything, they’re going to become more complex as AI gets more sophisticated and more integrated into our daily lives. The chatbot security risks are real, and the questions around AI data privacy are only going to get more pressing.

But here’s the thing: you don’t have to choose between using this brilliant technology and protecting your privacy. You just have to be smart about it. Share what you’re comfortable sharing. Use privacy settings. Stay informed about how these platforms work and what they do with your data. And most importantly, never assume that just because something feels private, it actually is.

Technology moves fast, but common sense is timeless. Trust these AI platforms to help you with tasks, to answer questions, to make your life a bit easier. But trust yourself more. Trust your instincts about what to share and what to keep to yourself.

And if you’re ever unsure whether something is too personal to share with an AI, ask yourself this: would I be comfortable with this conversation being read by a stranger? If the answer is no, then maybe keep that one to yourself.

Stay curious, stay cautious, and stay in control of your own data. That’s the best advice I can give you.

Walter

Disclaimer: This article represents general information about AI privacy and security as of 2025. Privacy policies and security practices vary between platforms and change over time. Always check the current privacy policy of any AI platform you use.
