Why This Matters More Than You Think

I’ll be candid with you. Something strange has been happening to Google lately, and I’m not the only one who’s noticed. You know that feeling when you search for something, anything really, and the first page of results looks like it was written by someone who learned English from a manual? Or worse, when you’re looking for a genuine restaurant review and instead get ten websites all saying the exact same thing in slightly different words?

Welcome to what internet folk are calling the dead internet theory, and it’s making Google searches about as useful as a chocolate teapot.

This isn’t just tech nerds being dramatic. This affects every single one of us who uses the internet to find information, which, let’s face it, is pretty much everyone. Whether you’re looking for a plumber, researching a health concern, or trying to find an actual human opinion about whether that new coffee maker is worth buying, you’re running headfirst into a wall of AI-generated content that’s about as helpful as asking your cat for directions.

The Google search quality decline isn’t some minor inconvenience. It’s fundamentally changing how we access information, and not for the better. I’ve been using Google since the early 2000s, and I can tell you, something’s gone properly wonky.

What We’re Actually Dealing With

Let me paint you a picture. The dead internet theory suggests that most of the internet content we see today isn’t created by real people anymore. It’s generated by bots, artificial intelligence, and automated systems, all designed to game search engines and make money from advertising. The theory goes that genuine human interaction online has become the minority, drowned out by an ocean of synthetic content.

Now, is the entire internet dead? No, that’s a bit dramatic. But the core concern is real. AI-generated content has flooded Google’s search results to the point where finding authentic, human-written information feels like panning for gold in a river of mud.

The driving force behind all this is money. Content farms use AI to pump out thousands of articles designed to rank in Google searches. They don’t care if the information is useful. They care about clicks, advertising revenue, and affiliate commissions. What it’s definitely not for is making your life easier or helping you find better information, despite what anyone might claim.

The Good Old Days (Yes, I’m Going There)

Remember the Yellow Pages? That enormous book that got dumped on your doorstep every year? Before Google, that’s how we found things. Or we asked friends. Or we went to the library and used something called the Dewey Decimal System, which I’m convinced was designed by someone who enjoyed watching people suffer.

When we needed information, we had encyclopedias. Britannica was the gold standard. You wanted to know about Napoleon? You pulled out the N volume and read an article written by an actual historian. The information was curated, fact-checked, and written by humans who knew what they were talking about.

Then came the early internet and search engines like AltaVista, Ask Jeeves, and Yahoo. These were clunky, but they were trying. You’d type in a question and get maybe ten relevant results if you were lucky. The internet was smaller then, more like a village where everyone knew everyone. Websites were made by enthusiasts, academics, and people who genuinely wanted to share information.

How Google Went From Hero to Villain

Google launched in 1998, and honestly, it was like magic. Two Stanford students, Larry Page and Sergey Brin, created an algorithm called PageRank that ranked websites based on how many other sites linked to them, and on how highly ranked those linking sites were themselves. The idea was brilliant: if lots of reputable websites link to you, you’re probably worth reading.
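If you’re curious what that idea looks like in practice, here’s a minimal sketch of the textbook version of PageRank. To be clear, this is not Google’s production code. The toy web, the page names, the damping value of 0.85, and the number of iterations are all my own assumptions for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank by repeated averaging.

    `links` maps each page to the list of pages it links to.
    The damping value and iteration count are illustrative assumptions.
    """
    # Collect every page that appears as a source or as a link target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on, split evenly among its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# A hypothetical four-page web, invented for this example.
toy_web = {
    "hobby-blog": ["encyclopedia"],
    "news-site": ["encyclopedia", "hobby-blog"],
    "encyclopedia": ["news-site"],
    "spam-page": ["encyclopedia"],
}

ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

In this made-up web, the page everyone links to comes out on top, and the page nobody links to comes out at the bottom, no matter how many links it hands out itself.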

In those early days, Google was clean, simple, and it worked beautifully. You typed in what you wanted, and boom, there it was. The first result was usually exactly what you needed. It felt like having a really smart friend who knew everything.

By the mid-2000s, Google had become the dominant search engine. They introduced features like Google Images, Google Maps, and Google News. Each update made searching easier and more comprehensive. The algorithm got smarter, learning to understand context and user intent. If you misspelled something, Google knew what you meant. Revolutionary stuff.

But here’s where things get interesting. As Google got better, people got craftier. Enter Search Engine Optimization, or SEO. Website owners figured out they could manipulate their content to rank higher in Google searches. At first, it was relatively innocent, just making sure your website was well-structured and used relevant keywords.

Then came the content farms. Around 2010, companies realized they could create websites stuffed with low-quality articles targeting specific search terms. These weren’t meant to be helpful. They were meant to rank in Google, attract clicks, and generate advertising revenue. Demand Media and Associated Content churned out thousands of articles daily, paying writers pennies to create superficial content about everything under the sun.

Google fought back with algorithm updates. The “Panda” update in 2011 specifically targeted low-quality content. The “Penguin” update in 2012 went after manipulative link-building. For a while, it seemed like Google was winning. Search quality improved. The rubbish got pushed down.

But then something changed. Through the 2010s, Google started prioritizing different things. They wanted to keep people on Google longer, so they rolled out knowledge panels (2012), featured snippets (2014), and direct answers. Sounds good, right? Except these features often pulled information from those same low-quality websites, and users never clicked through to verify the source.

The real catastrophe started around 2022-2023. That’s when AI content generators became accessible to everyone. Tools like ChatGPT and Jasper made it possible to create hundreds of articles in hours. And I mean articles that looked legitimate, with proper grammar and structure. The content farms went into overdrive.

Suddenly, Google’s search results became flooded with AI-generated content. Every search query returned pages and pages of similar-sounding articles, all saying roughly the same thing, none offering real insight or human experience. The dead internet theory stopped being a conspiracy and started feeling like Tuesday.

How This Whole Mess Actually Works

Let me walk you through what happens when you search for something on Google today, because understanding this will help you see why everything’s gone pear-shaped.

You type in a search query, let’s say “best vacuum cleaner for pet hair.” Google’s algorithm springs into action. It consults an index of billions of web pages, weighing hundreds of factors to decide what to show you. These factors include keywords, website authority, user engagement, loading speed, mobile-friendliness, and a long list of other signals Google won’t fully disclose.

Here’s the problem: AI content generators have learned to tick all these boxes. They use the right keywords, structure articles properly, include relevant headings, and even generate “user-friendly” content that passes Google’s readability checks. To Google’s algorithm, these AI-generated articles look legitimate.

Meanwhile, content farms have industrialized this process. They use AI to research trending topics, generate articles, publish them across multiple websites, and even create fake user engagement signals. Some use networks of websites to link to each other, artificially boosting their authority in Google’s eyes.

The algorithm can’t reliably distinguish between an article written by someone who’s actually used fifteen vacuum cleaners and an AI-generated piece that’s scraped information from other websites and regurgitated it in slightly different words. Both might have proper structure, relevant keywords, and decent readability scores.
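Here’s a toy sketch of why that happens. Google doesn’t publish its ranking formula, so every signal name, weight, and number below is invented purely for illustration. The only point is that a score built from surface signals has no way of seeing whether the author ever touched the product.

```python
# Hypothetical signals and weights, invented for illustration only.
# This is NOT Google's ranking formula, which is not public.
WEIGHTS = {
    "keyword_match": 0.4,
    "structure": 0.2,
    "readability": 0.2,
    "inbound_links": 0.2,
}


def surface_score(page):
    """Weighted sum of the measurable signals; anything else is ignored."""
    return sum(weight * page[name] for name, weight in WEIGHTS.items())


# Two made-up pages. "tested_product" is the thing readers care about,
# but it is not among the signals the score can measure.
human_review = {
    "keyword_match": 0.7,
    "structure": 0.6,
    "readability": 0.7,
    "inbound_links": 0.5,
    "tested_product": True,
}
ai_rewrite = {
    "keyword_match": 0.9,
    "structure": 0.9,
    "readability": 0.9,
    "inbound_links": 0.6,
    "tested_product": False,
}

for name, page in [("human review", human_review), ("AI rewrite", ai_rewrite)]:
    print(f"{name}: score={surface_score(page):.2f}, "
          f"tested product: {page['tested_product']}")
```

With these made-up numbers, the polished rewrite outscores the genuine review, because the one thing that separates them never enters the sum.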

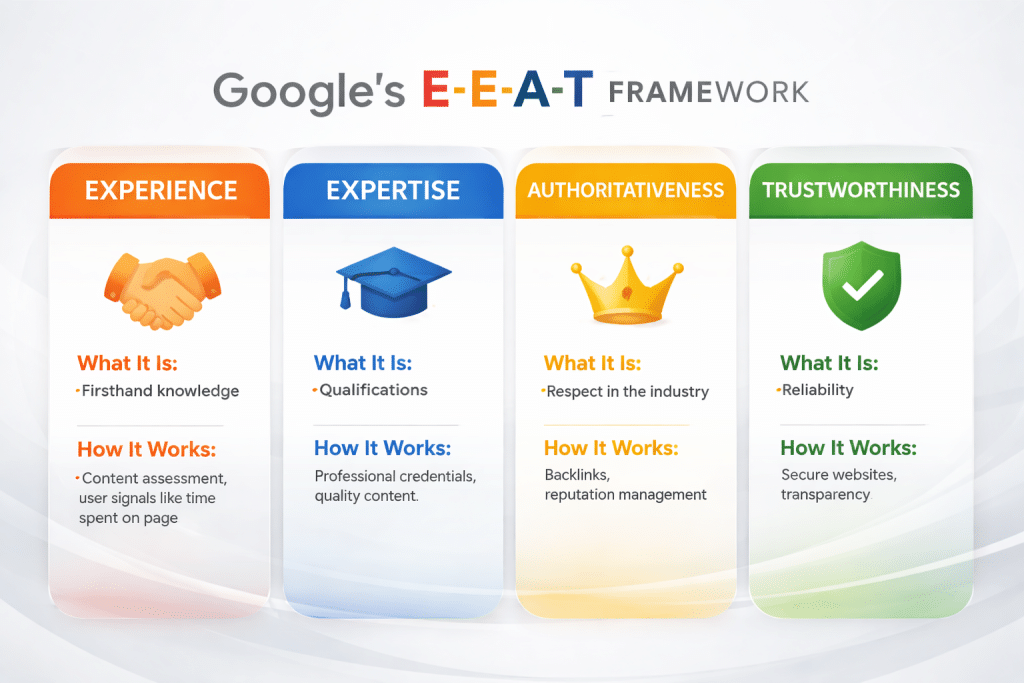

What Google does try to prioritize is something called E-E-A-T: Experience, Expertise, Authoritativeness, and Trustworthiness. But here’s the kicker: AI-generated content can fake most of these signals. It can cite sources (even if it hasn’t read them properly), include author bios (even if that author is fictional), and use confident language that sounds authoritative.

The result? You get search results that look professional but lack genuine human insight. They’re optimized for Google, not for you. It’s like going to a restaurant where everything on the menu looks delicious in the photos but tastes like cardboard because it was designed by a committee that never actually eats food.

What’s Coming Down the Pipeline

I wish I could tell you it’s going to get better, but I’d be lying. The future looks complicated, and I’m being generous with that word.

Google is now integrating its own AI directly into search results through something called the Search Generative Experience, or SGE, since rolled out more widely under the name AI Overviews. Instead of just showing you links to websites, Google’s AI generates an answer at the top of the page by synthesizing information from multiple sources. On the surface, this sounds convenient. In practice, it means you’re getting an AI’s interpretation of other content, which might itself be AI-generated. It’s AI all the way down, like some sort of digital turducken nobody asked for.

This creates a bizarre feedback loop. AI generates content, Google’s AI reads that content and generates summaries, which people then use to generate more AI content. We’re photocopying photocopies, and each generation gets blurrier and further from any original human insight.

Some search engines are trying different approaches. Brave Search emphasizes independent indexing. DuckDuckGo focuses on privacy. Perplexity AI attempts to provide sourced answers. But none have Google’s reach or resources, and they’re all grappling with the same fundamental problem: how do you find genuine human content in an ocean of synthetic text?

There’s also talk of blockchain-based verification systems for human-created content, digital signatures that prove a real person wrote something. But implementing this across the entire internet? That’s like trying to put toothpaste back in the tube while riding a bicycle. Backwards. In the rain.

The optimistic view is that Google will develop better AI detection methods and prioritize genuine human content. They’re certainly trying, or so they say. Recent algorithm updates claim to target AI spam. But it’s an arms race, and the spammers are well-funded and motivated.

The Dangers Lurking in the Shadows

Now, let’s talk about why this isn’t just annoying but potentially dangerous. When you can’t find reliable information, you can’t make informed decisions.

Medical information is particularly concerning. Imagine searching for symptoms or treatment options and getting AI-generated articles that sound authoritative but contain subtle inaccuracies. These articles pass Google’s checks because they’re well-written and cite sources, but they might be synthesizing information incorrectly or missing crucial context that a real doctor would include.

Financial advice is another minefield. AI-generated investment articles might sound sophisticated but lack the nuanced understanding of market conditions that comes from actual experience. Following this advice could cost you real money.

Then there’s the misinformation angle. AI can generate convincing fake reviews, fabricated news stories, and misleading product information at scale. When everything looks equally legitimate, how do you distinguish truth from fiction? You can’t just trust that the top Google result is reliable anymore.

There’s also a privacy concern that feeds into this. As Google struggles to identify quality content, they’re collecting more data about user behavior to improve their algorithms. What you click, how long you stay on pages, what you search for next: all of this is being tracked and analyzed. The irony is that in trying to solve the content quality problem, Google is potentially creating privacy vulnerabilities.

And here’s something that keeps me up at night: as AI-generated content becomes the norm, we’re losing institutional knowledge. When you search for how to fix a specific problem with a 1995 Toyota, you used to find forum posts from enthusiasts who’d actually done the work. Now you find AI-generated articles that have never touched a wrench. That human expertise is being buried, and once it’s gone, it’s gone.

Protecting Yourself in the Digital Wilderness

So what can you actually do about all this? I’m not going to leave you hanging with just problems and no solutions.

First, learn to spot the signs of AI-generated content. It tends to be generic, covering topics broadly without deep insight. It often lacks personal anecdotes or specific examples. The writing might be technically correct but somehow soulless, like reading an instruction manual written by someone who’s never used the product.

Second, dig deeper than the first page of Google results. I know it’s tedious, but sometimes the good stuff is buried on page two or three, where real human-written content hasn’t been optimized to death.

Third, use specialized search techniques. Put phrases in quotation marks to search for exact matches. Use “site:” to search within specific trusted websites. Add “forum” or “Reddit” to your search to find actual human discussions. These tricks help you bypass some of the AI spam.
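If you find yourself typing these tricks over and over, a small helper can assemble them for you. This is just a sketch: the function names and the example query are my own, and the search URL format is simply the one you see in your browser’s address bar, which could change.

```python
from urllib.parse import quote_plus


def build_query(terms, exact_phrase=None, site=None, extra_words=()):
    """Combine plain terms with the operators described above.

    All parameter names here are hypothetical helpers, not a Google API.
    """
    parts = [terms]
    if exact_phrase:
        # Quotation marks ask for an exact match of the phrase.
        parts.append(f'"{exact_phrase}"')
    if site:
        # site: limits results to one trusted website.
        parts.append(f"site:{site}")
    # Extra words such as "forum" or "Reddit" nudge results toward discussions.
    parts.extend(extra_words)
    return " ".join(parts)


def search_url(query):
    """Turn a query string into a URL you can paste into a browser."""
    return "https://www.google.com/search?q=" + quote_plus(query)


query = build_query("best vacuum", exact_phrase="pet hair", site="reddit.com")
print(query)
print(search_url(query))
```

The first line printed is the query exactly as you would type it into the search box; the second is the same thing encoded as a link.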

Fourth, diversify your information sources. Don’t rely solely on Google. Check multiple search engines. Go directly to trusted websites. Join online communities where real people share experiences. Yes, even Reddit, despite its flaws, often has more genuine information than the top Google results.

Fifth, be skeptical. If something sounds too polished, too perfect, too comprehensive, it might be AI-generated. Real human writing has personality, quirks, and sometimes admits uncertainty. If an article claims to definitively answer everything about a complex topic in 800 words, someone’s having you on.

Wrapping This Up

Look, I’m not going to sugarcoat this. The Google search quality decline is real, and the dead internet theory, while perhaps overstated, points to a genuine problem. We’re in a weird transitional period where AI-generated content has flooded the internet faster than anyone can effectively filter it.

Google built an empire on helping people find information, but they’re now struggling with a monster partly of their own creation. By making their algorithm gameable and prioritizing engagement over quality, they’ve incentivized the very behavior that’s now degrading their service.

The internet was supposed to democratize information, giving everyone access to human knowledge and experience. Instead, we’re drowning in synthetic content optimized for algorithms rather than people. It’s like we’ve built a massive library where most of the books are written by machines that have never lived a human experience.

But here’s the thing: we’re not powerless. By being more critical consumers of information, by supporting genuinely human content creators, and by demanding better from the platforms we use, we can push back against this tide. The internet isn’t dead yet, but it’s definitely on life support, and we’re the ones who need to decide whether to pull the plug or fight for its recovery.

I still remember when Google felt like magic, when you could trust that the top result was probably what you needed. Maybe we can’t get back to that innocence, but we can at least fight for an internet where human voices aren’t drowned out by the endless chatter of machines talking to themselves.

And honestly? That’s worth fighting for. Because the alternative is an internet where everything is synthetic and nothing is genuine, where we can’t tell human from machine, truth from fabrication. That’s not an internet worth having. That’s just a very expensive, very complicated way of being more alone than ever.

So next time you search for something and the results feel off, trust that instinct. You’re not imagining it. The internet has changed, and not for the better. But knowing that, understanding how it works, and learning to navigate around it? That’s how we take back some control.

Now if you’ll excuse me, I need to go search for a decent recipe for shepherd’s pie, and I’m absolutely dreading what Google’s going to show me.

Walter
