Look, I’ll be honest with you. I’ve watched enough science fiction films to know that when we start building intelligent machines, someone’s going to ask the uncomfortable question: “But what happens when it all goes wrong?”

And here’s the thing: we’re not in the realm of science fiction anymore. Artificial intelligence is everywhere. It’s reading your emails, recommending your next Netflix binge, helping doctors diagnose diseases, and even driving cars. So when people start asking harder questions about AI security, I don’t think they’re being paranoid. I think they’re being sensible.

The anxiety is real, and it’s ramping up. Every week there’s another headline about an AI doing something unexpected, something a bit dodgy, or something that makes you think, “Hang on, should we really be trusting this thing?” These aren’t just theoretical concerns anymore. They’re practical, everyday worries that affect all of us.

Why AI Security Matters More Than Your Netflix Password

Remember when computer security meant making sure your password wasn’t “password123”? Those were simpler times, weren’t they?

AI security is different because we’re not just protecting data anymore. We’re protecting decisions. We’re protecting trust. We’re protecting systems that are making choices about our lives, often without us even knowing it’s happening.

Think about it this way. When your bank uses AI to detect fraud, that’s brilliant, until the AI decides your perfectly legitimate purchase of a garden gnome collection is suspicious and freezes your account. When hospitals use AI to help diagnose patients, that’s genuinely life-saving technology, until someone figures out how to manipulate the system. When your car uses AI to avoid accidents, that’s wonderful, until it isn’t.

The stakes are higher because AI systems aren’t just following simple rules anymore. They’re learning, adapting, and making decisions based on patterns we don’t always understand. And that’s both the magic and the terror of it all.

What We Actually Use AI Security For (And What We Don’t)

Let’s get practical for a moment.

When we talk about AI security, we’re talking about protecting AI systems from being manipulated, making sure they do what they’re supposed to do, and ensuring they don’t accidentally (or deliberately) cause harm. We use it to safeguard everything from chatbots that handle customer service to sophisticated systems that manage power grids.

We use AI security to prevent what the experts call “adversarial attacks.” That’s a fancy way of saying someone’s trying to trick the AI into doing something stupid. Imagine putting a few carefully placed stickers on a stop sign so that a self-driving car’s vision system reads it as a speed limit sign. That’s an adversarial attack, and yes, researchers have actually demonstrated this against road-sign classifiers.

We also use AI security to make sure these systems aren’t learning the wrong lessons from their data. If you train an AI on biased information, it’ll make biased decisions. That’s not just a technical problem; it’s a moral one.

But here’s what AI security isn’t. It’s not about making AI systems completely unhackable or perfect, because nothing is. It’s not about preventing every possible thing that could go wrong, because we can’t even imagine all the ways things might go sideways. And it’s definitely not about stopping AI development altogether, despite what some people might suggest after a few pints down the pub.

The Before Times: When Security Was Simpler

Cast your mind back to the early days of computing. Security meant locking the computer room and maybe having a password. The threats were straightforward. Someone might steal your data, delete your files, or install a virus. Annoying? Absolutely. But at least you knew what you were dealing with.

Traditional computer security was like protecting your house. You put locks on the doors, maybe an alarm system, and you kept an eye out for suspicious characters. The rules were clear, the threats were understood, and the solutions were relatively straightforward.

Then along came the internet, and things got more complicated. Suddenly your house had a million doors, and people from all over the world were trying the handles. We adapted. We built firewalls, encryption, and all sorts of clever defences.

But AI changed the game entirely. Now it’s not just about protecting the house anymore. It’s about making sure the house itself doesn’t decide to do something unexpected. It’s about ensuring that the intelligent systems we’ve built to help us don’t become the very thing we need protection from.

The Evolution of Trustworthy AI: From “It Works!” to “Can We Trust It?”

The journey of trustworthy AI is fascinating, really. Let me walk you through it without making your eyes glaze over.

The Early Days: Rule-Based Systems

In the beginning, we had what we called expert systems. These were AIs that followed strict rules, like a very elaborate flowchart. If this happens, do that. If that happens, do this other thing. Simple, predictable, and reasonably secure because you could trace every decision back to a specific rule.

The problem? They were about as flexible as a steel rod. They couldn’t handle anything they weren’t explicitly programmed for.

The Machine Learning Revolution

Then we got clever. We started building systems that could learn from examples instead of following rigid rules. This was brilliant because suddenly AI could handle situations it had never seen before. It could recognize patterns, make predictions, and improve over time.

The security challenge? Well, now we had systems making decisions based on complex patterns that even their creators couldn’t fully explain. It’s like having a really smart employee who’s excellent at their job but can’t quite articulate how they do it.

Deep Learning: When Things Got Really Complicated

Deep learning took everything up another notch. These systems use artificial neural networks inspired by how our brains work (sort of). They’re incredibly powerful. They can recognize faces, understand speech, translate languages, and do things that seemed impossible just a decade ago.

But here’s the rub. These systems are even more opaque than their predecessors. We call it the “black box” problem. You put data in one end, get results out the other, but what’s happening in the middle? It’s complicated, even for the experts.

This is where AI security risks really started keeping people awake at night. How do you secure something you don’t fully understand? How do you trust a system that can’t explain its reasoning?

Current State: Explainable and Trustworthy AI

Now we’re in the era of trying to make AI more transparent and trustworthy. Researchers are developing techniques to peek inside the black box, to understand why an AI makes the decisions it does. We’re building systems with security baked in from the start, not bolted on as an afterthought.

We’re also developing frameworks for what we call “AI governance.” That’s a fancy term for having proper rules, oversight, and accountability for AI systems. Think of it as the difference between the Wild West and a society with actual laws and sheriffs.

How AI Security Actually Works: A Peek Under the Hood

Right, let’s talk about how we actually keep these AI systems secure. I promise to keep this as painless as possible.

Step One: Secure the Training Data

Everything an AI knows comes from the data it’s trained on. If someone poisons that data with bad information, the AI will learn bad lessons. It’s like teaching a child using books full of lies. They’ll grow up believing nonsense.

So the first step is making sure the training data is clean, accurate, and hasn’t been tampered with. We verify sources, check for anomalies, and keep the data locked down tighter than your gran’s secret recipe.
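One small piece of that "hasn’t been tampered with" check can be sketched in a few lines of Python. This is only an illustration, not how any particular team does it: the function names and the sample rows are made up for the example, and a real pipeline would keep the trusted fingerprint somewhere an attacker can’t also edit.

```python
import hashlib


def fingerprint(records):
    """Return a SHA-256 hex digest over the records, in order."""
    digest = hashlib.sha256()
    for record in records:
        data = record.encode("utf-8")
        # Length-prefix each record so ["ab", "c"] and ["a", "bc"] hash differently.
        digest.update(len(data).to_bytes(8, "big"))
        digest.update(data)
    return digest.hexdigest()


def is_untampered(records, trusted_fingerprint):
    """True if the records still match the fingerprint taken when they were vetted."""
    return fingerprint(records) == trusted_fingerprint


# Hypothetical training rows in "text,label" form.
trusted_rows = ["win a free prize,spam", "lunch at noon?,ham"]
trusted = fingerprint(trusted_rows)

# Someone quietly flips one label.
tampered_rows = ["win a free prize,ham", "lunch at noon?,ham"]

print(is_untampered(trusted_rows, trusted))   # True
print(is_untampered(tampered_rows, trusted))  # False
```

A fingerprint like this only tells you the data changed after you vetted it. It says nothing about whether the data was any good in the first place, which is why source verification and anomaly checks still matter.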

Step Two: Build in Robustness

We test AI systems against adversarial attacks. Remember those stickers on the stop sign? We simulate thousands of scenarios like that to find vulnerabilities. Then we train the AI to be more robust, to not be fooled by clever tricks.

It’s like teaching someone to spot a con artist. You show them all the common scams so they can recognize them in the wild.
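To make the idea of "testing against clever tricks" a bit more concrete, here is a toy sketch. It assumes a very simple linear classifier with made-up weights, which is nothing like a real vision model, but the principle carries over: nudge every input feature by a small amount in whichever direction hurts most, and see whether the decision flips. For a linear model this sign-based nudge is the worst case within the allowed budget; for deep networks, researchers use gradient-based methods in the same spirit.

```python
def score(weights, bias, x):
    """Linear score: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def worst_case_perturbation(weights, x, label, epsilon):
    """Move each feature by at most epsilon in the direction that hurts the true label."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen only for illustration.
weights = [2.0, -1.0, 0.5]
bias = -0.5
x = [0.6, 0.2, 0.4]

x_adv = worst_case_perturbation(weights, x, label=1, epsilon=0.25)

print(predict(weights, bias, x))      # 1
print(predict(weights, bias, x_adv))  # 0
```

No feature moved by more than 0.25, yet the answer changed. A robustness test runs checks like this across many inputs and budgets, and the failures feed back into training.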

Step Three: Monitor and Audit

Once an AI is deployed, we don’t just set it and forget it. We constantly monitor how it’s behaving. Is it making the decisions we expect? Is it showing any signs of being compromised? Are the results drifting over time?

We also conduct regular audits, checking that the AI is still doing what it’s supposed to do and hasn’t developed any unexpected quirks.
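Here is a rough sketch of what "are the results drifting over time?" might look like in code. It assumes a system that makes yes/no decisions (say, flagging transactions) and simply compares the recent flag rate with the rate seen during testing. The class name, window size and tolerance are all invented for the example; real monitoring uses richer statistics.

```python
from collections import deque


class DriftMonitor:
    """Compare the recent rate of flagged decisions with a known baseline rate."""

    def __init__(self, baseline_rate, window=100, tolerance=0.10):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # keeps only the latest `window` decisions

    def record(self, flagged):
        self.recent.append(1 if flagged else 0)

    def current_rate(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def drifting(self):
        # Only judge once the window is full, to avoid noisy early alarms.
        if len(self.recent) < self.recent.maxlen:
            return False
        return abs(self.current_rate() - self.baseline_rate) > self.tolerance


monitor = DriftMonitor(baseline_rate=0.05, window=20, tolerance=0.10)

# A normal stretch: 1 flag in 20 decisions.
for i in range(20):
    monitor.record(i == 0)
print(monitor.drifting())  # False

# A suspicious stretch: 8 flags in the next 20 decisions.
for i in range(20):
    monitor.record(i < 8)
print(monitor.drifting())  # True
```

An alarm like this doesn’t tell you why behaviour changed. It might be an attack, or it might just be that the world moved on. Either way, it’s the cue for a human to take a look.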

Step Four: Implement Access Controls

Not everyone should be able to poke around in an AI system. We implement strict access controls, making sure only authorized people can modify the AI or access sensitive information. It’s basic security, but it’s crucial.
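A bare-bones sketch of that idea, assuming three made-up roles and a handful of made-up actions. Real systems lean on proper identity and permission services, but the core rule is the same: if a role isn’t explicitly allowed to do something, the answer is no.

```python
# Hypothetical roles and actions, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"read_predictions"},
    "analyst": {"read_predictions", "read_training_data"},
    "ml_engineer": {"read_predictions", "read_training_data", "update_model"},
}


def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


def update_model(role, new_version):
    if not is_allowed(role, "update_model"):
        raise PermissionError(f"role {role!r} may not update the model")
    return f"model updated to {new_version}"


print(is_allowed("analyst", "read_training_data"))  # True
print(is_allowed("analyst", "update_model"))        # False
print(is_allowed("intern", "read_predictions"))     # False
print(update_model("ml_engineer", "v2"))            # model updated to v2
```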

Step Five: Plan for Failure

Here’s the thing that separates the professionals from the amateurs: assuming things will go wrong. We build in failsafes, backup systems, and kill switches. If an AI starts behaving badly, we need to be able to pull the plug quickly.
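A kill switch can be as simple as a wrapper that stops trusting the model after too many bad answers. The sketch below is an assumption-laden toy: the class name, the sanity check and the "send it to a human" fallback are all invented for illustration.

```python
class GuardedModel:
    """Wrap a model so repeated bad outputs trip a switch and route to a fallback."""

    def __init__(self, model, fallback, is_sane, max_failures=3):
        self.model = model
        self.fallback = fallback
        self.is_sane = is_sane
        self.max_failures = max_failures
        self.failures = 0
        self.tripped = False

    def kill(self):
        """Manual kill switch: stop using the model immediately."""
        self.tripped = True

    def predict(self, x):
        if self.tripped:
            return self.fallback(x)
        try:
            result = self.model(x)
            if not self.is_sane(result):
                raise ValueError("output failed sanity check")
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.tripped = True  # automatic trip after too many failures
            return self.fallback(x)
        return result


# Hypothetical model that should return a probability but misbehaves on large inputs.
guarded = GuardedModel(
    model=lambda x: x * 2,
    fallback=lambda x: "needs human review",
    is_sane=lambda p: 0 <= p <= 1,
    max_failures=2,
)

print(guarded.predict(0.2))  # 0.4
print(guarded.predict(0.9))  # needs human review
print(guarded.predict(0.8))  # needs human review
print(guarded.predict(0.2))  # needs human review
```

Notice the last call: the input was perfectly fine, but the switch had already tripped, so the model stays out of the loop until someone investigates and resets it. That’s the point.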

The Future: Where We’re Headed (And Why It Matters)

Looking ahead, I see the conversation about trustworthy AI becoming even more critical. We’re moving towards AI systems that are more powerful, more autonomous, and more integrated into critical infrastructure.

We’ll likely see regulatory frameworks becoming more sophisticated. The EU is already ahead of the game with its AI Act, which classifies AI systems by risk level and imposes requirements accordingly. Other countries will follow suit. We can’t have a Wild West approach to technology that affects so many aspects of our lives.

I also think we’ll see more emphasis on “secure by design” AI, where security isn’t an afterthought but is built into AI systems from the ground up. It’ll become as fundamental as making sure a bridge won’t collapse when you drive over it.

We’re also going to get better at explaining AI decisions. The black box will become more of a glass box. This isn’t just nice to have; it’s essential for building trust. People need to understand why an AI denied their loan application or flagged their medical scan.

And honestly? I think we’ll see more collaboration between AI developers, security experts, ethicists, and regular people. Because the decisions we make now about AI security will shape the world our grandchildren inherit.

Security and Vulnerabilities: The Stuff That Should Keep You Up at Night (But Not Too Much)

Let me be straight with you. AI security risks are real, and they’re diverse. But understanding them is the first step to managing them.

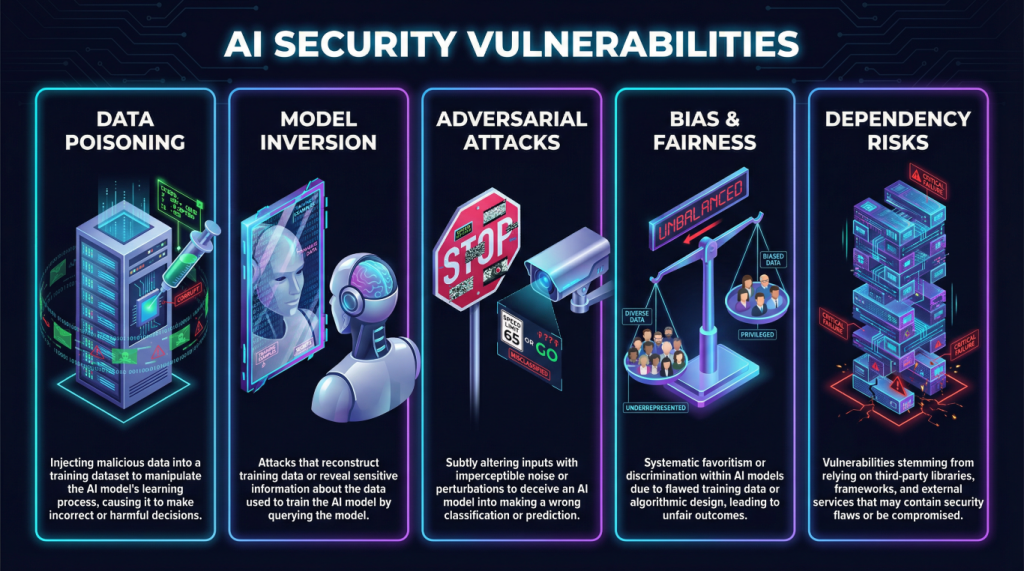

Data Poisoning

Imagine someone sneaking into a library and subtly changing facts in books. That’s data poisoning. Attackers inject malicious data into training sets, causing the AI to learn incorrect patterns. It’s insidious because the AI appears to work fine until it encounters specific situations where it makes terrible decisions.

A real example: researchers showed they could manipulate an AI spam filter by poisoning its training data, making it classify legitimate emails as spam while letting actual spam through.
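To see how little it takes, here is a deliberately crude sketch. It assumes a toy spam filter that just counts how often each word shows up in spam versus legitimate mail, and every message in it is made up. Real filters are far more sophisticated, but the failure mode has the same shape.

```python
from collections import Counter


def train(examples):
    """Learn a weight per word: times seen in spam minus times seen in ham."""
    weights = Counter()
    for text, label in examples:
        for word in set(text.lower().split()):
            weights[word] += 1 if label == "spam" else -1
    return weights


def classify(weights, text):
    total = sum(weights[word] for word in set(text.lower().split()))
    return "spam" if total > 0 else "ham"


# Hypothetical clean training data.
clean = [
    ("win free prize now", "spam"),
    ("free money win", "spam"),
    ("meeting agenda for monday", "ham"),
    ("monday project meeting notes", "ham"),
]

# The attacker slips in ordinary work words, labelled as spam.
poison = [("project meeting monday", "spam")] * 6

clean_model = train(clean)
poisoned_model = train(clean + poison)

message = "project meeting monday"
print(classify(clean_model, message))     # ham
print(classify(poisoned_model, message))  # spam
```

The poisoned filter still catches "win free prize", so a quick spot check would look healthy. It only misbehaves on the messages the attacker cared about.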

Model Inversion Attacks

This is creepy. Attackers can sometimes extract sensitive information from an AI model itself. If an AI was trained on private medical records, a clever attacker might be able to reconstruct some of that private information by carefully querying the model. It’s like being able to figure out the ingredients of a secret sauce just by tasting the final product.

Adversarial Examples

This is the stickers-on-the-stop-sign scenario. Small, carefully crafted changes to input data, often too subtle for a human to notice, can completely fool AI systems. A picture of a cat with a few altered pixels might be classified as a dog. Harmless in that example, potentially deadly if we’re talking about medical imaging or autonomous vehicles.

Bias and Fairness Issues

This isn’t always a deliberate attack, but it’s a massive vulnerability. If your AI learns from biased data, it’ll make biased decisions. We’ve seen AI recruitment tools that discriminated against women, facial recognition systems that worked poorly for people of color, and lending algorithms that perpetuated historical inequities.

This isn’t just a technical problem; it’s a societal one. And it’s something we need to take seriously.

Dependency Vulnerabilities

AI systems often rely on complex chains of software libraries and dependencies. If any link in that chain is compromised, the whole system could be vulnerable. It’s like a house of cards: one weak point can bring everything down.
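One modest defence is to write down exactly which versions of each library you vetted and to complain loudly when something else turns up. The sketch below assumes made-up package names and versions; `importlib.metadata` is part of Python’s standard library, while everything else here is invented for illustration.

```python
from importlib import metadata


def installed_versions(names):
    """Look up the installed version of each package, or None if it is missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def find_mismatches(pinned, installed):
    """Return (name, expected, found) for every package that differs from its pin."""
    problems = []
    for name, expected in sorted(pinned.items()):
        found = installed.get(name)
        if found != expected:
            problems.append((name, expected, found))
    return problems


# Hypothetical pins and a hypothetical environment, for illustration only.
pinned = {"examplelib": "1.4.2", "othertool": "0.9.0"}
installed = {"examplelib": "1.4.2", "othertool": "0.9.1"}

print(find_mismatches(pinned, installed))  # [('othertool', '0.9.0', '0.9.1')]
```

In a real project you would build `installed` with `installed_versions(pinned)` rather than typing it by hand. A version check like this won’t catch a tampered package that keeps its version number, so it works best alongside hash checks from your package manager.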

Why You Should Care (And What You Can Do)

I know this all sounds a bit doom and gloom, but here’s the thing: awareness is power. You don’t need to become a security expert, but you should ask questions.

When a company tells you they’re using AI to make decisions that affect you, ask them about their security measures. Ask how they’re ensuring the system is fair and trustworthy. Ask what happens if things go wrong.

Support regulations that require transparency and accountability in AI systems. Vote for politicians who take this stuff seriously. And most importantly, don’t just blindly trust that someone else has it all figured out.

Because the truth is, we’re all learning as we go. AI is a powerful, transformative technology, but it’s not magic, and it’s not infallible.

Wrapping This Up: Trust, But Verify

So here we are, at the end of our little journey through the world of AI security and trust. If you take nothing else away from this, remember one thing: AI is a tool, and like any tool, it can be used well or poorly, safely or recklessly.

The anxiety people feel about AI isn’t irrational. It’s a natural response to rapid change and increasing dependence on systems we don’t fully understand. But anxiety without action is just worry. Anxiety channeled into asking the right questions, demanding accountability, and supporting responsible development? That’s how we build a future where AI serves us rather than the other way around.

We’ve come a long way from simple rule-based systems to the sophisticated AI that powers so much of our modern world. Each step forward has brought new capabilities and new challenges. The evolution towards trustworthy AI isn’t finished. It’s ongoing, and we all have a role to play in shaping it.

The security challenges are real. Data poisoning, adversarial attacks, bias, and vulnerabilities exist. But so do solutions, safeguards, and an increasing awareness of the importance of getting this right. We’re building better frameworks, developing more transparent systems, and learning from our mistakes.

The future of AI will be shaped by the decisions we make today about security, transparency, and trust. We can’t eliminate all risks; that’s impossible with any technology. But we can be smart about managing them. We can demand better from the companies and governments deploying AI systems. We can educate ourselves and others about both the potential and the pitfalls.

And perhaps most importantly, we can maintain a healthy skepticism. Trust in AI shouldn’t be blind faith. It should be earned through transparency, accountability, and demonstrated reliability. When someone tells you their AI system is perfectly safe and trustworthy, ask them to prove it. Ask them what could go wrong. Ask them what they’re doing to prevent it.

Because at the end of the day, AI security isn’t just about protecting systems; it’s about protecting people. It’s about ensuring that as we build increasingly intelligent machines, we don’t lose sight of the human values that should guide their development and deployment.

We’re all in this together, figuring it out as we go. And that’s okay, as long as we keep asking the hard questions, demanding better answers, and refusing to accept “trust us” as good enough. The future of AI is being written right now, and we all get to hold the pen.

So yes, be a bit nervous about AI security. But also be engaged, be curious, and be demanding. Because the technology is too important to leave solely in the hands of the technologists. It affects all of us, and we all deserve a say in how it’s developed and deployed.

Now, if you’ll excuse me, I need to go change all my passwords. Again.

Walter
